How we use camera traps to survey wildlife

For two years we’ve captured and analysed tens of thousands of images to learn as much as we can about The Quoin’s residents.

We’re determined to learn as much as we can about the animals that call The Quoin home.

However, when you’re a small team working across thousands of hectares, this is a difficult thing to do without technology. Even if we had the will, humans are smelly, noisy, large and noticeable, so it’s unlikely animals would allow us to meaningfully observe them for long periods of time, no matter how well we hid, or how still we stood.

Thankfully, we have a fleet of motion-activated cameras at our disposal — known in the science world as ‘camera traps’ — which can do the watching on our behalf.

Camera traps are an effective set-and-forget tool, remaining active 24/7 with a battery life of up to eight weeks. Compact, silent and discreet, they can also see at night using infrared flash technology. It's like having multiple extra pairs of eyes monitoring an area at all times.

But for all their benefits, camera traps have limitations too.

For one, they’re easily triggered by rustling grass, shrubs and trees, by shadows, and by the sun moving across the sky. For example, we’ve retrieved cameras only to find 20,000 images of majestic gum trees swaying in the wind and casting shadows across the undergrowth.

Equally, because the battery power and infrared flash limit the shutter speed to 1/30th of a second at night, faster-moving animals often come out blurry. Wombats and devils are easy to detect, but small, bouncy animals are not.

Camera traps are also one-directional, so if an animal passes behind or beside the camera, you’ll never know. And the data they collect offers a detailed view of only a tiny part of the landscape.

But these limitations are not deal-breakers. By knowing what a camera trap can and can’t do, we’ve been able to strategically place them across the property, gleaning invaluable and actionable insights.

We use camera traps for a whole range of activities, including monitoring specific interventions in the landscape, such as building leaky weirs in old drainage channels.

We also use them to support other measuring and monitoring methods, such as our recent infrared (IR) drone survey, during which we placed cameras in the same area the drones flew over, allowing us to compare and contrast species distributions.

But perhaps most notably — and most relevant to this journal edition — we use them to learn what species are present on the property, how these species behave, where they congregate, and how abundant they are.

For two years now, we’ve conducted property-wide camera trap surveys, capturing and analysing tens of thousands of images to learn as much as we can about The Quoin’s animals.

This journal article walks you through the methods we’ve employed, details the process we’ve developed, and shares key insights we’ve gained along the way.

Our 2023-2024 camera survey

Context

We did an extensive camera-trap survey in summer 2022-2023 to help us develop our baseline Natural Capital Account.

Our goal was to measure the species richness (how many different species are present) of native terrestrial mammals at The Quoin, and then compare these numbers to what we’d expect to see pre-colonisation.
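Because species richness is simply a count of distinct species, comparing a survey with a reference list comes down to set operations. The sketch below illustrates that idea; the species names in the example are placeholders, and the reference list is hypothetical rather than our actual pre-colonisation list.

```python
def native_richness(detected: set[str], native_reference: set[str]) -> int:
    """Number of distinct native species recorded (non-natives are ignored)."""
    return len(detected & native_reference)


def missing_natives(detected: set[str], native_reference: set[str]) -> set[str]:
    """Native species on the reference list that the survey did not record."""
    return native_reference - detected


if __name__ == "__main__":
    # Hypothetical inputs for illustration only.
    reference = {"Tasmanian devil", "Eastern quoll", "Long-nosed potoroo", "Wombat"}
    recorded = {"Tasmanian devil", "Wombat", "Fallow deer"}
    print(native_richness(recorded, reference))  # 2
    print(sorted(missing_natives(recorded, reference)))  # ['Eastern quoll', 'Long-nosed potoroo']
```

Intersecting with the reference list is what keeps non-natives such as fallow deer out of the native richness figure.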

2023-2024

Last summer, we repeated the survey, with the goal of deepening our insights.

For four weeks, 20 Swift Enduro cameras were deployed across the property, taking photos day and night. When a camera is triggered, it takes three photos at one-second intervals, then waits one minute before it can be triggered again. Four weeks is a safe deployment length: while our 12 Ni-MH batteries could technically last for months in an environment with fewer triggers, the high level of activity at The Quoin means they typically need recharging after around eight weeks.
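A rough calculation of survey effort helps explain why the batteries work so hard. This sketch assumes all 20 cameras ran for the full four weeks and that every trigger produced exactly three photos; neither will hold perfectly in the field, so treat the results as ballpark figures.

```python
# Ballpark survey-effort arithmetic for the 2023-2024 deployment.
# Assumptions: every camera ran for all 28 days, and every trigger
# produced exactly three photos.
CAMERAS = 20
DAYS = 4 * 7            # four-week deployment
PHOTOS_PER_TRIGGER = 3  # three photos at one-second intervals
RAW_IMAGES = 210_000    # approximate raw image total

camera_days = CAMERAS * DAYS                      # effort, often reported as trap-nights
triggers = RAW_IMAGES / PHOTOS_PER_TRIGGER        # estimated number of triggers
triggers_per_camera_day = triggers / camera_days  # average trigger rate per camera

print(f"{camera_days} camera-days, ~{triggers:,.0f} triggers, "
      f"~{triggers_per_camera_day:.0f} triggers per camera per day")
```

Under those assumptions this works out to 560 camera-days and roughly 125 triggers per camera per day, many of them wind and shadow rather than animals.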

For every survey, we’ve also spread approximately 100 mL of tuna oil directly in front of each camera, which we'll continue to do for consistency. As we are measuring species richness rather than behaviour, using bait to draw in the greatest possible diversity of animals is an acceptable approach.

In total, these cameras captured ~210,000 raw images, which yielded ~43,000 images of animals.

Processing

With ~210,000 images in hand, our next step was to process them and identify the animals. Here’s how we did it…

First, we used EcoAssist to classify each image as containing an ‘animal’, ‘person’ or ‘vehicle’, or as ‘empty’, with a confidence value for each detection. This step matters because you don’t want to try to identify tens of thousands of blank images. EcoAssist has a simple interface that then lets you move files into folders based on their detections, and you can set a minimum confidence for a detection to count. For example, a really clear image of a kangaroo might be detected with 90% confidence, but a vaguely macropodal blob in the foliage might only get 40%. To avoid discarding genuine animals, we kept images with detections above 30% confidence.
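The threshold-and-sort step can be sketched in a few lines. This is not EcoAssist's own code: it assumes detection results in a JSON structure loosely modelled on MegaDetector-style output (a `detection_categories` map plus per-image `detections` with `category` and `conf`), and the file names are hypothetical. Check your tool's actual export before adapting it.

```python
import json
from collections import Counter

THRESHOLD = 0.30  # keep detections above 30% confidence, as in our survey

# Hypothetical example results; field names are an assumption.
RESULTS_JSON = """
{
  "detection_categories": {"1": "animal", "2": "person", "3": "vehicle"},
  "images": [
    {"file": "cam01/IMG_0001.JPG", "detections": [{"category": "1", "conf": 0.91}]},
    {"file": "cam01/IMG_0002.JPG", "detections": [{"category": "1", "conf": 0.40}]},
    {"file": "cam02/IMG_0001.JPG", "detections": [{"category": "1", "conf": 0.12}]},
    {"file": "cam02/IMG_0002.JPG", "detections": []}
  ]
}
"""


def label_image(image: dict, categories: dict[str, str], threshold: float) -> str:
    """Label an image by its most confident detection above threshold, else 'empty'."""
    kept = [d for d in image["detections"] if d["conf"] > threshold]
    if not kept:
        return "empty"
    best = max(kept, key=lambda d: d["conf"])
    return categories[best["category"]]


def sort_images(results: dict, threshold: float = THRESHOLD) -> dict[str, str]:
    """Map each file to the folder ('animal', 'person', 'vehicle' or 'empty') it belongs in."""
    categories = results["detection_categories"]
    return {img["file"]: label_image(img, categories, threshold) for img in results["images"]}


if __name__ == "__main__":
    folders = sort_images(json.loads(RESULTS_JSON))
    print(Counter(folders.values()))
```

Giving each image the label of its single most confident detection is a simplifying choice; an image containing both a person and an animal could reasonably go in both folders.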

We then did a quick manual sense-check to confirm the folders really did contain the detected type. On the whole, the program worked well, apart from a few human-esque plants that waved in the wind and sneaked through as ‘animals’. We moved these to the ‘empty’ folder. In the end, about 20% of our images (taking up 40GB) were marked as ‘animal’.

Before we uploaded all these ‘animal’ images to Wildlife Insights, we made two changes: adjusting the filenames to avoid duplicates, and manually adding geotags to each image. Then, we were able to let Wildlife Insights work its magic, using machine learning to identify the species in each photo.
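Duplicate filenames typically arise because each camera numbers its own images, so two cameras can both produce an `IMG_0001.JPG`. A minimal renaming sketch, assuming images are stored in one folder per camera (the folder layout and names here are hypothetical), prefixes each filename with its camera folder and refuses to proceed if any name would still clash. Geotagging isn't covered here.

```python
from pathlib import Path


def unique_name(path: Path) -> str:
    """Prefix the file name with its camera folder, e.g. cam07/IMG_0001.JPG -> cam07_IMG_0001.JPG."""
    return f"{path.parent.name}_{path.name}"


def plan_renames(paths: list[Path]) -> dict[Path, Path]:
    """Map each image to its new, camera-prefixed path (same folder, new name)."""
    plan = {p: p.with_name(unique_name(p)) for p in paths}
    names = [new.name for new in plan.values()]
    # Names must stay unique once every camera's images are pooled for upload.
    if len(set(names)) != len(names):
        raise ValueError("renaming would still leave duplicate file names")
    return plan


def apply_renames(plan: dict[Path, Path]) -> None:
    """Rename files on disk; run only after checking the plan."""
    for old, new in plan.items():
        old.rename(new)


if __name__ == "__main__":
    images = sorted(Path("survey_2023_2024").glob("*/*.JPG"))  # hypothetical folder layout
    for old, new in plan_renames(images).items():
        print(f"{old} -> {new}")
```

Planning first and renaming second means a clash is caught before any file on disk has been touched.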

Wildlife Insights successfully identified many of our images and saved us a lot of time, but we still needed to check them manually and add our own corrections and identifications. The inaccuracies stem from two key factors.

First, since Wildlife Insights is a global project, its machine-learning models perform best with distinctive species that are well represented in the global dataset. This means some species (kangaroo, wombat and echidna) are reasonably easy to identify automatically, while others (quoll, bandicoot, potoroo and pademelon) still need human interpretation.

Second, some of our species are genuinely hard to distinguish. For example, a blurry night-time image could show a small wallaby or a big pademelon.
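One way to focus manual checking is a simple review rule. The sketch below is hypothetical: the set of hard-to-identify species mirrors the examples above, but the 0.8 confidence cut-off and the function name are illustrative assumptions, not Wildlife Insights settings or our exact process.

```python
# Hypothetical review rule: which AI identifications get a human check.
HARD_TO_IDENTIFY = {"quoll", "bandicoot", "potoroo", "pademelon"}
MIN_CONFIDENCE = 0.8  # illustrative cut-off, not a Wildlife Insights setting


def needs_manual_review(species: str, confidence: float) -> bool:
    """Flag an identification if the species is hard to tell apart or the model is unsure."""
    return species.lower() in HARD_TO_IDENTIFY or confidence < MIN_CONFIDENCE


if __name__ == "__main__":
    for species, conf in [("Kangaroo", 0.95), ("Pademelon", 0.99), ("Wombat", 0.50)]:
        print(species, needs_manual_review(species, conf))
```

The rule flags a pademelon even at high model confidence, reflecting the point above that some species are genuinely hard to tell apart regardless of how sure the model is.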

As with all artificial intelligence and machine learning, this field is moving fast. As new models are trained on ever-larger datasets, Tasmanian animals should become easier to identify. We also think there are opportunities to use video rather than still images (many of our animals have distinctive gaits), and improvements in camera technology should further improve automated species recognition.

Want even more detail?

For a very detailed guide to processing camera-trap images, read our playbook.

Gleaning insights

What we saw

Here are the 11 native mammals we photographed in order of how frequently we saw them:

Bennett’s wallaby (Notamacropus rufogriseus)

Forester kangaroo (Macropus giganteus)

Pademelon (Thylogale billardierii)

Tasmanian devil (Sarcophilus harrisii)

Brushtail possum (Trichosurus vulpecula)

Wombat (Vombatus ursinus)

Spotted-tailed quoll (Dasyurus maculatus)

Southern brown bandicoot (Isoodon obesulus)

Echidna (Tachyglossus aculeatus)

Eastern barred bandicoot (Perameles gunnii)

Eastern quoll (Dasyurus viverrinus)

We also captured three non-native species:

Fallow deer (Dama dama), the third most common species overall

Feral cat (Felis catus)

Rabbit (Oryctolagus cuniculus)
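Ranking species by how often they were seen depends on how individual photos are grouped into sightings, since one animal lingering in front of a camera can trigger it many times. The sketch below merges photos of the same species at the same camera into one sequence while consecutive photos are no more than a set gap apart. The 30-minute default is an assumption (a commonly used independence interval in camera-trap studies), not necessarily the rule behind our ranking, and the data is hypothetical.

```python
from collections import Counter
from datetime import datetime, timedelta
from typing import NamedTuple


class Identification(NamedTuple):
    camera: str
    species: str
    taken: datetime


def count_sequences(ids: list[Identification], gap: timedelta = timedelta(minutes=30)) -> Counter:
    """Count independent sequences per species.

    Photos of the same species at the same camera belong to one sequence
    while each photo is no more than `gap` after the previous one.
    """
    counts: Counter = Counter()
    last_seen: dict[tuple[str, str], datetime] = {}
    for ident in sorted(ids, key=lambda i: i.taken):
        key = (ident.camera, ident.species)
        previous = last_seen.get(key)
        if previous is None or ident.taken - previous > gap:
            counts[ident.species] += 1  # start of a new, independent sequence
        last_seen[key] = ident.taken
    return counts


if __name__ == "__main__":
    t0 = datetime(2024, 1, 10, 21, 0)  # hypothetical timestamps
    ids = [
        Identification("cam01", "Tasmanian devil", t0),
        Identification("cam01", "Tasmanian devil", t0 + timedelta(seconds=1)),
        Identification("cam01", "Tasmanian devil", t0 + timedelta(hours=2)),
        Identification("cam02", "Bennett's wallaby", t0),
    ]
    for species, n in count_sequences(ids).most_common():
        print(species, n)
```

Here the two devil photos one second apart collapse into a single sequence, while the visit two hours later counts as a second one.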

What we learnt

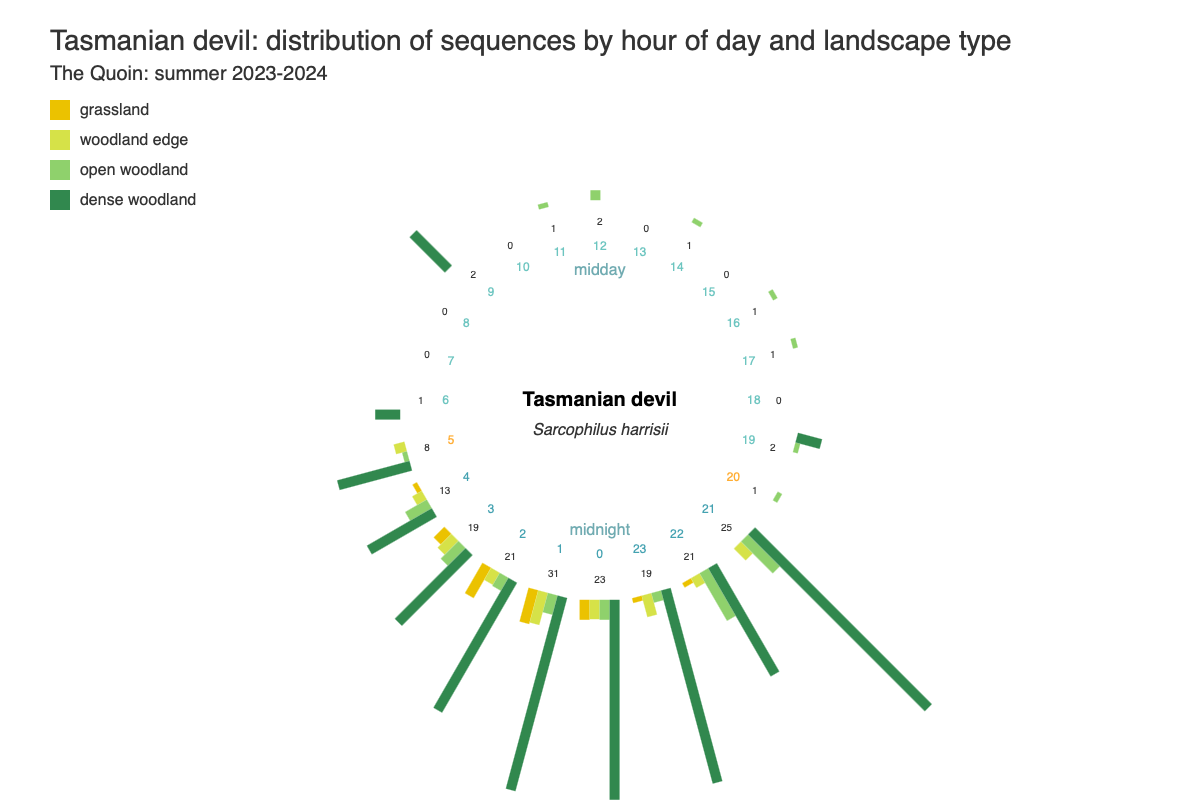

To draw meaning from our photographs, we assigned each camera-trap location one of four landscape types:

Grassland (open areas, both natural and as a result of human clearing);

Woodland edge (the interface between the grassland and the forest);

Open woodland (where the understory allows easy passage and good visibility); and

Dense woodland (which has a thick understory).

We can then analyse sequence counts by landscape type to learn when and where we’re likely to see different animals (such as the Bennett’s wallaby and Tasmanian devil, both featured below).
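Breaking sequence counts down by landscape type is a join between each sequence's camera and that camera's assigned type, followed by a tally. In this sketch the camera IDs and their landscape assignments are hypothetical placeholders.

```python
from collections import Counter

# Hypothetical camera-to-landscape assignments for illustration.
LANDSCAPE = {
    "cam01": "Grassland",
    "cam02": "Woodland edge",
    "cam03": "Open woodland",
    "cam04": "Dense woodland",
}


def counts_by_landscape(sequences: list[tuple[str, str]]) -> Counter:
    """Tally sequences per (landscape type, species) from (camera, species) pairs."""
    return Counter((LANDSCAPE[camera], species) for camera, species in sequences)


def share_by_landscape(counts: Counter, species: str) -> dict[str, float]:
    """Fraction of a species' sequences recorded in each landscape type."""
    total = sum(n for (_, sp), n in counts.items() if sp == species)
    return {land: n / total for (land, sp), n in counts.items() if sp == species}


if __name__ == "__main__":
    sequences = [
        ("cam01", "Bennett's wallaby"),
        ("cam01", "Bennett's wallaby"),
        ("cam02", "Bennett's wallaby"),
        ("cam04", "Tasmanian devil"),
    ]
    counts = counts_by_landscape(sequences)
    print(share_by_landscape(counts, "Bennett's wallaby"))
```

If the landscape types don't have equal numbers of cameras, dividing each tally by the camera-days in that landscape type gives a fairer comparison than raw counts.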

What’s next?

Our Natural Capital Account will be formally resurveyed every five years to allow comparison with our 2022-2023 benchmark survey. In between, we run comprehensive surveys to better understand the landscape we’re working in and to inform our regenerative activities. These include annual surveys at the same locations used in the first Natural Capital Account survey, as well as targeted surveys that answer specific questions or guide property management plans.

Our current 2024-2025 survey concentrates on smaller marsupials, such as quoll, potoroo, bandicoot and, if we're lucky, bettong. To maximise our chances of photographing these animals, we’ve sacrificed some field of view and placed our camera traps lower in the dense undergrowth. We’ve already photographed potoroos, which suggests this strategy is working.

In 2027-2028 we’ll do a repeat of the Natural Capital Account survey, from which we hope to see a positive shift in species richness. Our 2022-2023 Natural Capital Account didn’t include eastern quolls, but since then we've had some isolated sightings. We’ll be participating in a quoll translocation project later this year, and hope to soon have established populations on the property.

Looking ahead, our camera traps will also become increasingly handy as we kick off our first restoration project.

Our restoration strategy is to plant 4 m² vegetation islets that mimic nearby reference habitats in degraded areas. We’ll plant 72 islets at a density of 10 per hectare to improve landscape connectivity within the restoration zone. We’ll then fence them to stop deer, wallabies and possums from eating the seedlings until they’re big enough to fend for themselves. In time, these vegetation islands will become places for small animals to shelter and feast, before leaving trails of seeds in their wake, some of which will germinate and grow, reconnecting the islands and kicking off a revegetation cycle.
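The planting figures imply a few useful numbers. This sketch assumes all 72 islets sit within the restoration zone at the stated density of 10 per hectare, and (for the spacing estimate only) that they are laid out on an even square grid, which the real layout may not follow.

```python
# Restoration-zone arithmetic from the planting plan.
ISLETS = 72
ISLET_AREA_M2 = 4   # each vegetation islet is 4 m²
ISLETS_PER_HA = 10  # planting density
M2_PER_HA = 10_000

zone_ha = ISLETS / ISLETS_PER_HA                    # implied size of the restoration zone
planted_m2 = ISLETS * ISLET_AREA_M2                 # total area planted directly
planted_share = planted_m2 / (zone_ha * M2_PER_HA)  # fraction of the zone planted
spacing_m = (M2_PER_HA / ISLETS_PER_HA) ** 0.5      # spacing if islets formed a square grid (assumption)

print(f"zone ~{zone_ha:.1f} ha, {planted_m2} m² planted "
      f"({planted_share:.1%} of the zone), ~{spacing_m:.0f} m between islets")
```

On these assumptions the zone is about 7.2 ha, yet only 288 m² (about 0.4%) is planted directly, with islets roughly 32 m apart. Most of the revegetation is expected to come from the seed dispersal described above rather than from planting.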

As you can see, nature is our project’s number-one stakeholder. Our success relies on watching and learning from birds, bandicoots, potoroos, bettongs and quolls alike. Camera traps are an invaluable tool in our kit to help us do this.

It’s likely we’ll have some successes, some hiccups and some failures, but thanks to our ability to monitor our interventions, every outcome will be a lesson.

This article was written by: Bronte McHenry

And edited by: Andrew Rettig, Andrew Wenzel, Karina West, Lisa Miller and Michael Honey
